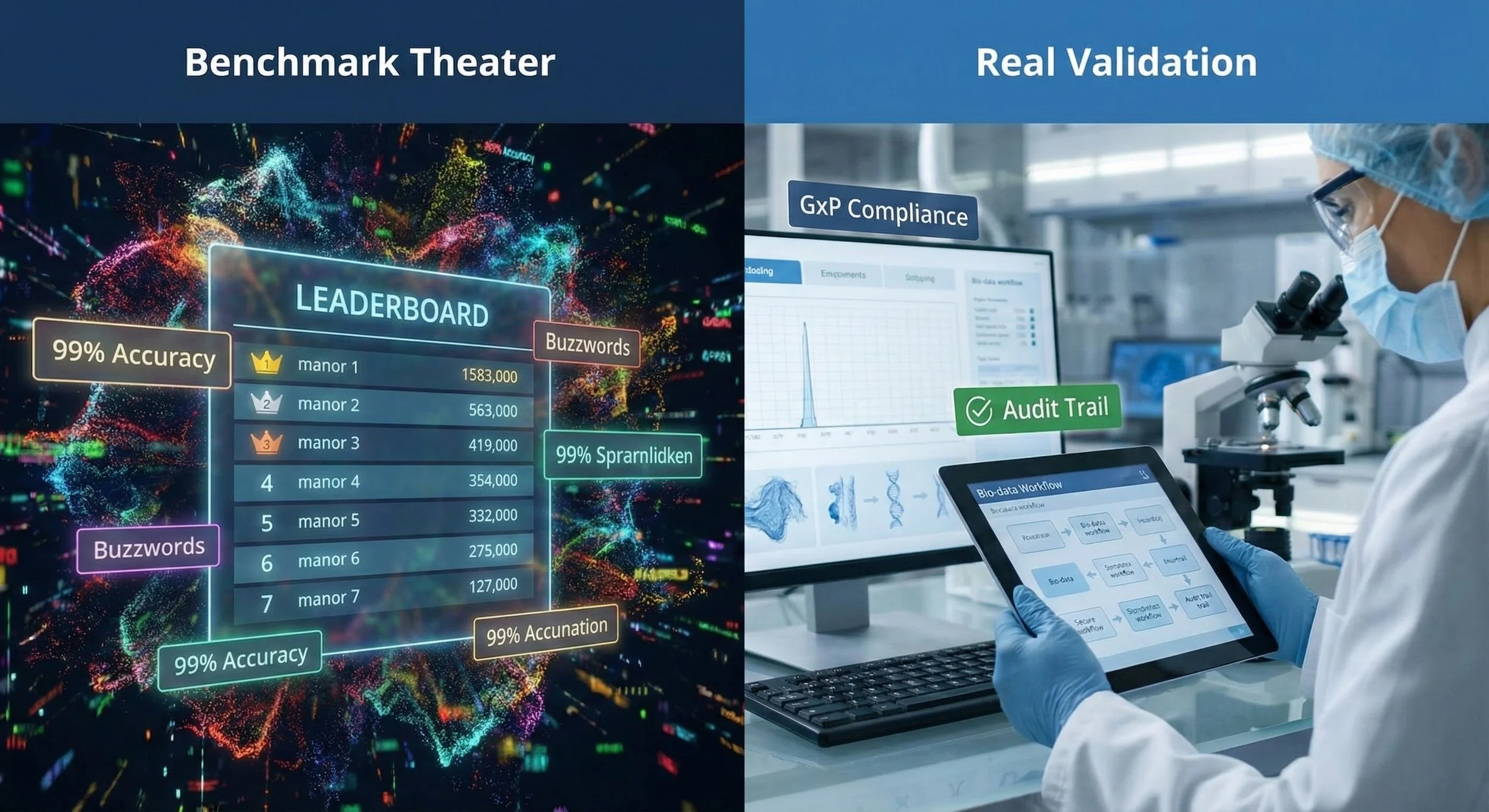

Validated ≠ Leaderboards

Benchmarks are useful—but they don’t prove your model is ready for real work. I design fit‑for‑purpose evaluations and the operational controls that make AI/LLMs both useful in R&D and auditable when stakes are higher.

What I Bring

Context of Use → risk: we define the decision, users, and failure modes so evidence matches impact.

Traceable, domain data: eval sets with lineage (ALCOA+), leakage checks, and realistic edge cases.

Pre‑registered acceptance criteria: metrics, thresholds, sample sizes—agreed up front.

HITL built‑in: review thresholds, work instructions, training.

Lifecycle ready: monitoring/drift KPIs, owners, alerts, golden‑set cadence.

Change control for retraining: triggers, impact assessment, rollback, release notes.

Curious if you’re fit-for-purpose today?

Book a 20‑minute fit check. I’ll walk through the scorecard, flag gaps, and recommend the smallest experiment that proves value.

Prefer email? Send your use case to kayla@kaylabritt.com (3–5 sentences)